Zippro: The Design and Implementation of an Interactive Zipper

Pin-Sung Ku, Jun Gong, Te-Yen Wu, Yixin Wei, Yiwen Tang, Barrett Ens, Xing-Dong Yang
ACM Conference on Human Factors in Computing Systems (CHI), 2020

Motivation

Computers are becoming smaller and more ubiquitous, and they progressively “weave themselves into the fabric of everyday life”, just as Mark Weiser envisioned thirty years ago. By today’s standards, concepts such as smart wearables and the Internet of Things are no longer considered future technologies. Research continues to advance computing technology to merge the digital world seamlessly into people’s daily lives. Innovations such as digital jewelry, fabric displays, and textile sensors all exemplify these efforts.

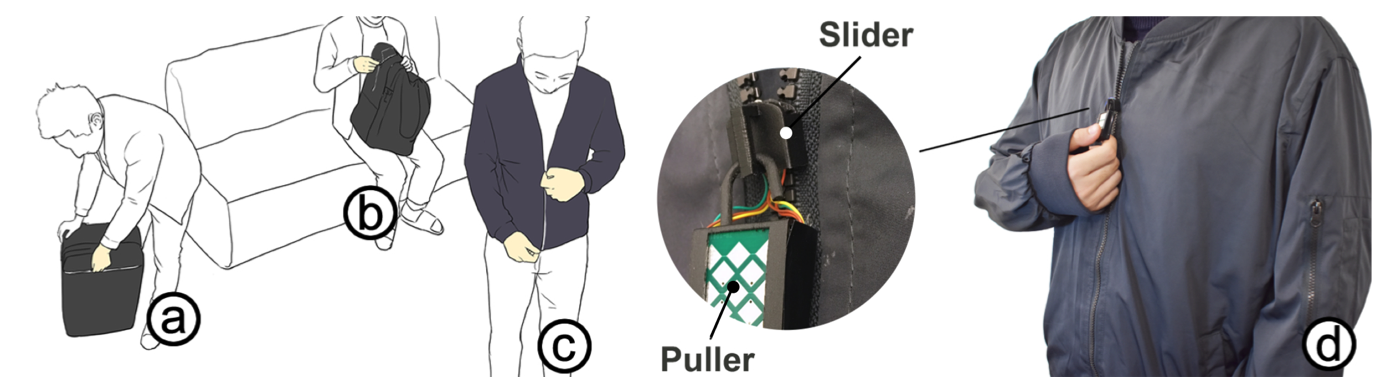

In this paper, we extend this body of research by bringing interactivity to everyday objects that have zippers, such as clothing (jackets and jeans) and luggage (bags and suitcases). Zippers are found everywhere and are familiar to most people, which we believe gives them great potential for ubiquitous computing. As a new input channel, the zipper can widen the interaction bandwidth of ordinary objects beyond existing approaches such as touch input on the object’s fabric surface. For example, by sensing whether the zipper is open or closed, the system can notify users if they leave their backpack open in a public setting. Alternatively, the zipper can serve as an eyes-free touch input device for subtle interactions in social scenarios. Because observers cannot easily tell whether someone is actively using technology or just fidgeting with a zipper, this type of interaction can avoid disruption in social settings or meetings. More generally, integrating input from common zipper usage can open the door to new interactions that support daily activities.

An interactive zipper brings interactivity to daily objects, such as (a) a suitcase, (b) a backpack, or (c) a jacket. This is enabled through (d) our self-contained Zippro prototype, which senses (1) slider location, (2) slider movement, (3) hand grips, (4) tapping and swiping on the puller, and (5) the identity of the operator.

Zippro Prototype

We developed a self-contained prototype to enable some of the interactions suggested by our studies. By implementing a subset of the derived sensing options, our device can sense slider location, slider motion, hand grips, user identification, and touch input on the puller. Our goal was to show initial technical feasibility and demonstrate the use cases of an interactive zipper.

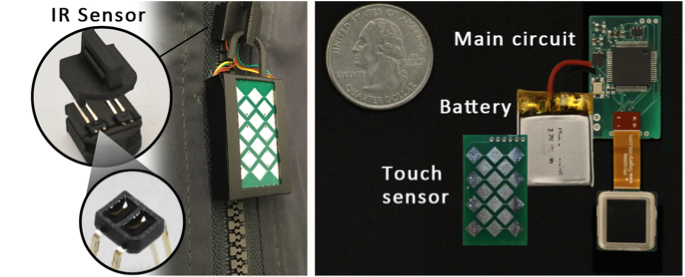

Our prototype is composed of a 3D-printed slider and puller. The slider is size 5 (~5 mm wide when the teeth are closed) and can replace the existing slider on a standard zipper of the same size. The motion and travel distance of the slider were sensed by counting the teeth passing beneath two embedded IR reflectance sensors (QRE1113, ON Semiconductor). The IR sensors were positioned above the two opposing rows of teeth, one per row, and offset 8.5 mm from each other along the slider’s direction of travel, so the direction of movement can be detected from the phase difference between the two sensor signals. Because of this simple structure, the slider does not know its absolute position. Therefore, calibration has to be performed at a selected origin, which serves as a reference for measuring the slider’s relative position. For example, the origin can be the bottom stop, specified by the user (e.g., by tapping the puller).
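The direction-sensing scheme described above behaves much like a quadrature encoder. The following is a minimal sketch under stated assumptions: each IR channel is assumed to be already thresholded to a binary tooth/gap signal, and the 8.5 mm offset is assumed to put the two signals roughly a quarter-cycle out of phase. The names `SliderTracker`, `update`, `calibrate`, and `distance_mm` are hypothetical, and this simple 1× decoding (count rising edges on one channel, read the direction from the other) is an illustration, not necessarily the method used in the prototype.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class SliderTracker:
    """Hypothetical relative-position tracker for a two-sensor zipper slider.

    Assumes both IR channels are already thresholded to 0 (gap) or 1 (tooth)
    and are roughly a quarter-cycle out of phase.
    """

    position: int = 0               # signed tooth count relative to the origin
    _prev_a: Optional[int] = None   # previous channel-A sample (None before the first)

    def calibrate(self) -> None:
        """Mark the current slider location as the origin (e.g., on a puller tap)."""
        self.position = 0

    def update(self, a: int, b: int) -> int:
        """Feed one pair of samples; return the step taken (-1, 0, or +1)."""
        step = 0
        # A rising edge on channel A means one tooth has passed sensor A.
        if self._prev_a == 0 and a == 1:
            # B's level at that moment encodes direction: low if A leads B.
            step = 1 if b == 0 else -1
        self._prev_a = a
        self.position += step
        return step

    def distance_mm(self, tooth_pitch_mm: float) -> float:
        """Convert the tooth count to millimetres; the pitch is an assumed input."""
        return self.position * tooth_pitch_mm


tracker = SliderTracker()
# Two forward cycles (A leads B), then one backward cycle (B leads A).
forward = [(0, 0), (1, 0), (1, 1), (0, 1)] * 2 + [(0, 0)]
backward = [(0, 1), (1, 1), (1, 0), (0, 0)]
for a, b in forward + backward:
    tracker.update(a, b)
print(tracker.position)  # 1
```

Which channel leads for "forward" depends on how the sensors sit on the slider, so the sign convention would need to be checked against real hardware. Counting all four signal transitions per cycle instead of one rising edge would give finer resolution at the cost of a small lookup table.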

Zippro prototype, containing an IR sensor, capacitive sensor, fingerprint sensor, sensing board, and battery.

Tapping and swiping on the puller were sensed through a capacitive sensor placed on the outward-facing side of the puller. Hand grip detection and user identification were implemented using a capacitive touch fingerprint sensor (FPC1020AM, FingerPrint) placed on the inward-facing side of the puller. The sensor has a resolution of 508 dpi and captures 192 × 192 pixel images at 8-bit depth over an area of 16.6 mm × 16.4 mm. The fingerprint sensor captures skin landmarks, including the fingerprint of the index finger, allowing the system to distinguish between the two grip gestures and between users. We used the open-source software SourceAFIS for user identification. When the system is not in use, the fingerprint sensor is kept in deep-sleep mode to save energy. It wakes up when a user touches the puller, then captures an image of the contact area of the user’s finger, which is sent to a laptop via Bluetooth for image processing and pattern recognition.
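The wake-on-touch flow above can be sketched as a small state machine. This is a minimal sketch under assumptions: `capture` and `send` are hypothetical placeholders for the sensor driver and the Bluetooth link, and `FingerprintPipeline` and `SensorState` are illustrative names, not part of the Zippro firmware. The only figures taken from the text are the 192 × 192 image size and 8-bit depth, which work out to 192 × 192 × 1 = 36,864 bytes per uncompressed capture.

```python
from enum import Enum, auto
from typing import Callable

IMAGE_WIDTH = 192    # pixels, from the sensor description
IMAGE_HEIGHT = 192   # pixels
BYTES_PER_PIXEL = 1  # 8-bit depth
IMAGE_BYTES = IMAGE_WIDTH * IMAGE_HEIGHT * BYTES_PER_PIXEL  # 36,864 bytes


class SensorState(Enum):
    DEEP_SLEEP = auto()
    CAPTURING = auto()


class FingerprintPipeline:
    """Hypothetical sleep -> wake -> capture -> transmit cycle.

    `capture` and `send` are placeholders for the sensor driver and the
    Bluetooth link; they are not real FPC1020AM or Bluetooth APIs.
    """

    def __init__(self, capture: Callable[[], bytes], send: Callable[[bytes], None]) -> None:
        self.state = SensorState.DEEP_SLEEP
        self._capture = capture
        self._send = send

    def on_touch(self) -> None:
        """Handle a touch on the puller while the sensor is asleep."""
        if self.state is not SensorState.DEEP_SLEEP:
            return  # a capture is already in progress; ignore the extra touch
        self.state = SensorState.CAPTURING
        try:
            image = self._capture()
            if len(image) != IMAGE_BYTES:
                raise ValueError(f"expected {IMAGE_BYTES} bytes, got {len(image)}")
            # Recognition (e.g., with SourceAFIS) runs on the laptop, not on the puller.
            self._send(image)
        finally:
            self.state = SensorState.DEEP_SLEEP  # always return to low-power mode


sent = []
pipeline = FingerprintPipeline(capture=lambda: bytes(IMAGE_BYTES), send=sent.append)
pipeline.on_touch()
print(len(sent), len(sent[0]), pipeline.state.name)  # 1 36864 DEEP_SLEEP
```

The `finally` clause reflects the energy-saving goal in the text: whatever happens during a capture, the sketch puts the sensor back into deep sleep.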

Selected Press Coverage

Hackster.io: Presenting Ex-zippit A: The Zippro