Indutivo: Contact-Based, Object-Driven Interactions with Inductive Sensing

Jun Gong, Xin Yang, Teddy Seyed, Josh Urban Davis, Xing-Dong Yang
ACM Symposium on User Interface Software and Technology (UIST), 2018

Motivation

Contextual interactions based on object recognition and manipulation have enormous potential in small wearables (e.g., smartwatches) and IoT devices (e.g., Amazon Echo, Nest Thermostat, Cortana Home Assistant), where input is generally difficult because of small form factors and the lack of effective input modalities. However, precise object recognition and the detection of lateral movements (e.g., sliding, hinging, and rotation) remain challenging.

We propose a new sensing technique based on induction that enables precise, contact-based detection, classification, and manipulation of conductive objects (primarily metallic) commonly found in households and offices, such as utensils or small electronic devices. Our technique allows a user to tap a conductive object or their finger on a device (e.g., a smartwatch) to trigger an action. Once the object is detected, the user can use it for continuous 1D input such as sliding, hinging, or rotating, depending on its physical affordance.
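The sensing circuit is not described here, so the following is only a minimal sketch of the general inductive-sensing principle, not Indutivo's implementation. A sensor coil forms an LC tank that resonates at f = 1/(2π√(LC)); a conductive object touching the coil induces eddy currents that change the coil's effective inductance (for a non-ferromagnetic metal such as copper or aluminum it typically drops, raising f). The hypothetical `ContactDetector` flags a tap when the measured frequency moves more than a chosen threshold away from a slowly tracked idle baseline. The component values, threshold, and drift rate are illustrative assumptions.

```python
import math


def resonant_frequency(inductance_h: float, capacitance_f: float) -> float:
    """Resonant frequency in Hz of an ideal LC tank: f = 1 / (2*pi*sqrt(L*C))."""
    return 1.0 / (2.0 * math.pi * math.sqrt(inductance_h * capacitance_f))


class ContactDetector:
    """Hypothetical tap detector: flags contact when the resonant frequency
    moves more than `threshold_hz` away from an idle baseline."""

    def __init__(self, baseline_hz: float, threshold_hz: float, alpha: float = 0.01):
        self.baseline_hz = baseline_hz
        self.threshold_hz = threshold_hz
        self.alpha = alpha  # illustrative rate for tracking slow drift while idle

    def update(self, measured_hz: float) -> bool:
        shift = measured_hz - self.baseline_hz
        # abs() because the shift can go either way depending on the material.
        in_contact = abs(shift) > self.threshold_hz
        if not in_contact:
            # Follow slow drift (e.g., temperature) only when nothing touches the coil.
            self.baseline_hz += self.alpha * shift
        return in_contact


# Illustrative values: a 10 uH coil with a 100 pF capacitor (about 5.03 MHz).
f_idle = resonant_frequency(10e-6, 100e-12)
f_touch = resonant_frequency(9.5e-6, 100e-12)  # metal lowers the effective L
detector = ContactDetector(baseline_hz=f_idle, threshold_hz=20e3)
print(detector.update(f_touch))  # True
```

With these example values, a drop from 10 µH to 9.5 µH raises the resonant frequency by roughly 130 kHz, well past the 20 kHz example threshold. Updating the baseline only while idle keeps a long press from being absorbed into the baseline.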

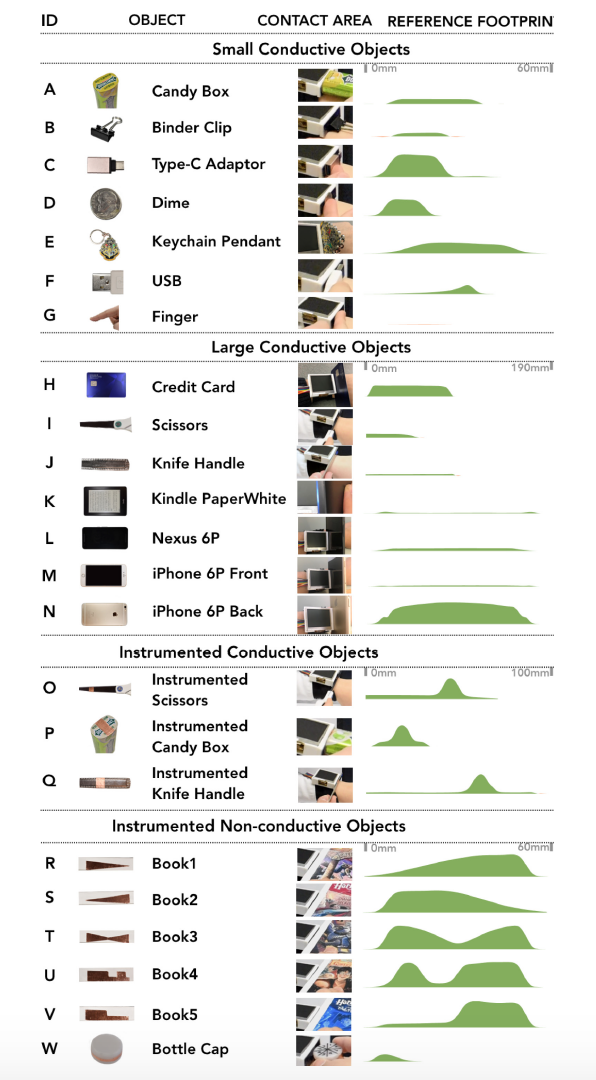

Object Recognition

We tested 23 objects, classified into four types: large conductive objects, small conductive objects, instrumented conductive objects, and instrumented non-conductive objects. Large conductive objects are those whose contact area is larger than the sensor; some are metallic, while others are electronic devices with built-in metallic components. Small conductive objects have a contact area smaller than the sensor. Instrumented conductive objects are conductive objects whose contact area is instrumented with a 10 mm wide strip of copper tape. Instrumented non-conductive objects are non-conductive objects whose contact areas are instrumented with copper tape in different patterns.
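Which classifier Indutivo uses is not stated here. As one simple possibility, assumed purely for illustration, recognition can be framed as matching a new sensor reading against reference readings collected earlier for each object, here with a k-nearest-neighbor majority vote. The feature vector (one value per sensor channel), the object labels, and `knn_classify` are all hypothetical.

```python
import math
from collections import Counter
from typing import Sequence

# Hypothetical feature vector: one normalized value per sensor channel.
Reading = Sequence[float]


def euclidean(a: Reading, b: Reading) -> float:
    """Euclidean distance between two readings of equal length."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def knn_classify(sample: Reading, references: list[tuple[Reading, str]], k: int = 3) -> str:
    """Label a new reading by majority vote among its k nearest reference readings."""
    nearest = sorted(references, key=lambda ref: euclidean(sample, ref[0]))[:k]
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]


# Made-up reference data for two objects (not from the study).
refs = [
    ([0.10, 0.02], "dime"), ([0.11, 0.03], "dime"), ([0.09, 0.02], "dime"),
    ([0.50, 0.40], "table knife"), ([0.52, 0.41], "table knife"), ([0.49, 0.39], "table knife"),
]
print(knn_classify([0.105, 0.025], refs))  # dime
```

A nearest-neighbor vote needs no training step beyond storing the reference readings, which makes it a convenient baseline; a real system would likely normalize features per channel first.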

Our system achieved an overall accuracy of 95.8% (s.e. = 0.81%), and 21 of the 23 tested objects were recognized with an accuracy above 90%. This is very promising because the study deliberately included conditions that typically lower recognition accuracy: no per-user calibration, no user training, and a considerable time gap between collecting the reference data and running the experiment.
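To make the reported statistics concrete, the sketch below computes a mean accuracy with its standard error (sample standard deviation divided by √n) and lists the objects above the 90% cutoff. It assumes, since this is not stated here, that the s.e. is taken across per-participant accuracies; the values in the example are made up, not the study's data.

```python
import statistics


def mean_and_se(values: list[float]) -> tuple[float, float]:
    """Mean and standard error (sample standard deviation / sqrt(n))."""
    mean = statistics.fmean(values)
    se = statistics.stdev(values) / len(values) ** 0.5
    return mean, se


def objects_above(per_object_accuracy: dict[str, float], cutoff: float = 0.90) -> list[str]:
    """Names of objects whose recognition accuracy exceeds the cutoff."""
    return [name for name, acc in per_object_accuracy.items() if acc > cutoff]


# Made-up example: two per-participant accuracies and two per-object accuracies.
print(mean_and_se([0.9, 1.0]))                        # approximately (0.95, 0.05)
print(objects_above({"dime": 0.98, "keys": 0.85}))    # ['dime']
```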

1D Object Manipulation

Sliding: We used the average error distance to measure sliding accuracy. Across all tested objects, the average error distance was 0.82 mm (s.e. = 0.17 mm), i.e., below 1 mm.
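How an object's position is estimated is not explained here. A common approach, assumed for this sketch, is to record sensor readings at known positions and interpolate between them. The hypothetical `interpolate_position` maps a reading to millimeters by piecewise-linear interpolation over a calibration table, and `average_error_distance` computes the mean absolute difference between estimated and true positions, which is what the average error distance measures.

```python
from bisect import bisect_left


def interpolate_position(reading: float, calib_readings: list[float],
                         calib_positions_mm: list[float]) -> float:
    """Piecewise-linear map from a sensor reading to a slide position in mm.

    calib_readings must be strictly increasing; out-of-range readings are clamped.
    """
    if reading <= calib_readings[0]:
        return calib_positions_mm[0]
    if reading >= calib_readings[-1]:
        return calib_positions_mm[-1]
    i = bisect_left(calib_readings, reading)  # calib_readings[i-1] < reading <= calib_readings[i]
    r0, r1 = calib_readings[i - 1], calib_readings[i]
    p0, p1 = calib_positions_mm[i - 1], calib_positions_mm[i]
    t = (reading - r0) / (r1 - r0)
    return p0 + t * (p1 - p0)


def average_error_distance(estimates_mm: list[float], targets_mm: list[float]) -> float:
    """Mean absolute distance between estimated and true positions."""
    return sum(abs(e - t) for e, t in zip(estimates_mm, targets_mm)) / len(targets_mm)


# Hypothetical calibration table: readings recorded at 0, 10 and 30 mm.
calib_r = [0.0, 1.0, 2.0]
calib_p = [0.0, 10.0, 30.0]
print(interpolate_position(1.5, calib_r, calib_p))       # 20.0
print(average_error_distance([5.0, 20.5], [5.5, 20.0]))  # 0.5
```

Piecewise-linear interpolation only assumes the reading changes monotonically with position; a denser calibration table reduces the error where that relationship is strongly nonlinear.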

Hinging: We used the average angular error to measure hinging accuracy. Across all three tested objects, the average angular error was 1.64° (s.e. = 0.37°).
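The angular counterpart of the average error distance is the mean absolute difference between estimated and true angles. The hypothetical helper below also wraps each difference into the range 0 to 180°; a hinge's limited range does not require this, but a freely rotating object such as the bottle cap would, since 359° and 1° are only 2° apart.

```python
def angular_error_deg(estimated: float, target: float) -> float:
    """Smallest absolute difference between two angles, in degrees (0 to 180)."""
    diff = (estimated - target) % 360.0  # Python's % always returns a value in [0, 360)
    return min(diff, 360.0 - diff)


def average_angular_error(estimates: list[float], targets: list[float]) -> float:
    """Mean wrap-aware angular error over paired estimates and targets."""
    errors = [angular_error_deg(e, t) for e, t in zip(estimates, targets)]
    return sum(errors) / len(errors)


print(angular_error_deg(359.0, 1.0))                        # 2.0
print(average_angular_error([30.0, 359.0], [28.5, 1.0]))    # 1.75
```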

Applications

Our first application is a video player that shows Forward, Play/Pause, and Backward buttons along the right (east) side of the screen. Tapping a dime on the sensor next to a button triggers the corresponding action (Figure a). This keeps the finger from occluding the screen and avoids false input when a hand accidentally touches the sensor.

Our second application is a top-down aircraft game. The instrumented bottle cap can be used both to launch the app and as a rotating controller to steer the aircraft (Figure b). This way, the screen is occluded neither by an on-screen controller nor by the user's finger. The example also shows that users can build their own novel smartwatch controllers or input devices from cheap objects and materials.

Our third application is a brick breaker game. Like many other games, it is difficult to play on a smartwatch because the finger occludes the screen while dragging the paddle. We show that the paddle can instead be positioned precisely using a binder clip as a physical handle (Figure c).

The fourth application associates a user's books (or conductive markers attached to them) with audio copies stored on their smartwatch. Tapping a book on the smartwatch plays its audio, or downloads the audio if it is not yet on the watch (Figure d). This offers an alternative to navigating and searching for the desired audio.

The fifth application is a fitness app that encourages the user to log calorie information during a meal. The user enters an estimated calorie value by hinging the handle of a table knife, so they do not have to touch the screen with fingers that are messy from eating (Figure e).

Finally, switching between modes on current smartwatches can be slow. With our last application, a user can use the pendant of their car keys to quickly activate voice mode on the smartwatch before starting a vehicle (Figure f).
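Each application above reduces to two mappings: a tap location selects a discrete action, and a continuous 1D value drives an app parameter. The sketch below is illustrative only. It assumes the sensor reports the tap position along the screen edge in millimeters, and the button positions, value ranges, and the names `BUTTONS`, `button_for_tap`, and `map_range` are hypothetical.

```python
# Hypothetical button positions (mm along the sensor) for the video player.
BUTTONS = {"forward": 5.0, "play_pause": 15.0, "backward": 25.0}


def button_for_tap(tap_position_mm: float, buttons: dict[str, float] = BUTTONS) -> str:
    """Return the button whose position is closest to where the object was tapped."""
    return min(buttons, key=lambda name: abs(buttons[name] - tap_position_mm))


def map_range(value: float, in_lo: float, in_hi: float,
              out_lo: float, out_hi: float) -> float:
    """Linearly map value from [in_lo, in_hi] to [out_lo, out_hi], clamping at the ends."""
    t = (value - in_lo) / (in_hi - in_lo)
    t = min(max(t, 0.0), 1.0)
    return out_lo + t * (out_hi - out_lo)


print(button_for_tap(14.0))               # play_pause
# Assumed 0 to 90 degree knife hinge mapped to 0 to 1000 kcal in the fitness app.
print(map_range(45.0, 0.0, 90.0, 0.0, 1000.0))  # 500.0
```

The same `map_range` call could drive the brick breaker paddle from a slide position or the aircraft heading from a rotation angle; clamping keeps the output valid when the object moves past the calibrated range.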

Selected Press Coverage

EurekAlert: Energy harvesting and innovative inputs highlight tech show gadgetry

Dartmouth Press: Dartmouth labs bringing the latest in developmental consumer tech to UIST 2018